Visualizing an AI-native architecture for multi-modal software workflow agents

Understanding the architecture and technical nuances of autonomous workflow agents powered by multi-modal models

Motivation

This article closes a series on the current state of traditional autonomous software workflow agents. Part 1 focused on the impact of multi-modal large language models on existing tools. Parts 2 and 3 focused on how such agents can be designed effectively with product, UX, data, systems and cost of ownership in mind. Here I want to sum up by discussing a vision for what the next generation of workflow agents looks like through a technical lens, drawing on recent research on multi-modal language models and tying the existing gaps and user journey to viable solutions that address the problem statement and pain points. The article uses a real-life example of an autonomous agent for a financial services use case to better explain how such agent systems can be built and architected with multi-modal models.

Moving from analysis to action

2023 saw a massive advancement in the quality and efficiency of language models of all sizes being implemented at scale, with cloud-based models such as GPT-4 from OpenAI, Claude 2 from Anthropic and Command from Cohere, as well as open models such as Llama 2 (Meta), Mixtral 8x7B (Mistral AI) and many more listed on the Open LLM Leaderboard on Hugging Face. By predicting the next token, language models can complete sentences, hold a chat conversation, summarize large chunks of text, generate and understand images and perform basic reasoning from the context given. While doing so, they can also perform basic actions such as running a web search with Bing or spinning up a Python environment for code execution. However, it is the ability of agents to interact with the real world by calling existing apps and services that gives them "arms and hands" to automate business processes and make users far more productive. Action is the area of advancement with the most potential to create value for end users.

Understanding the evolution in the stack

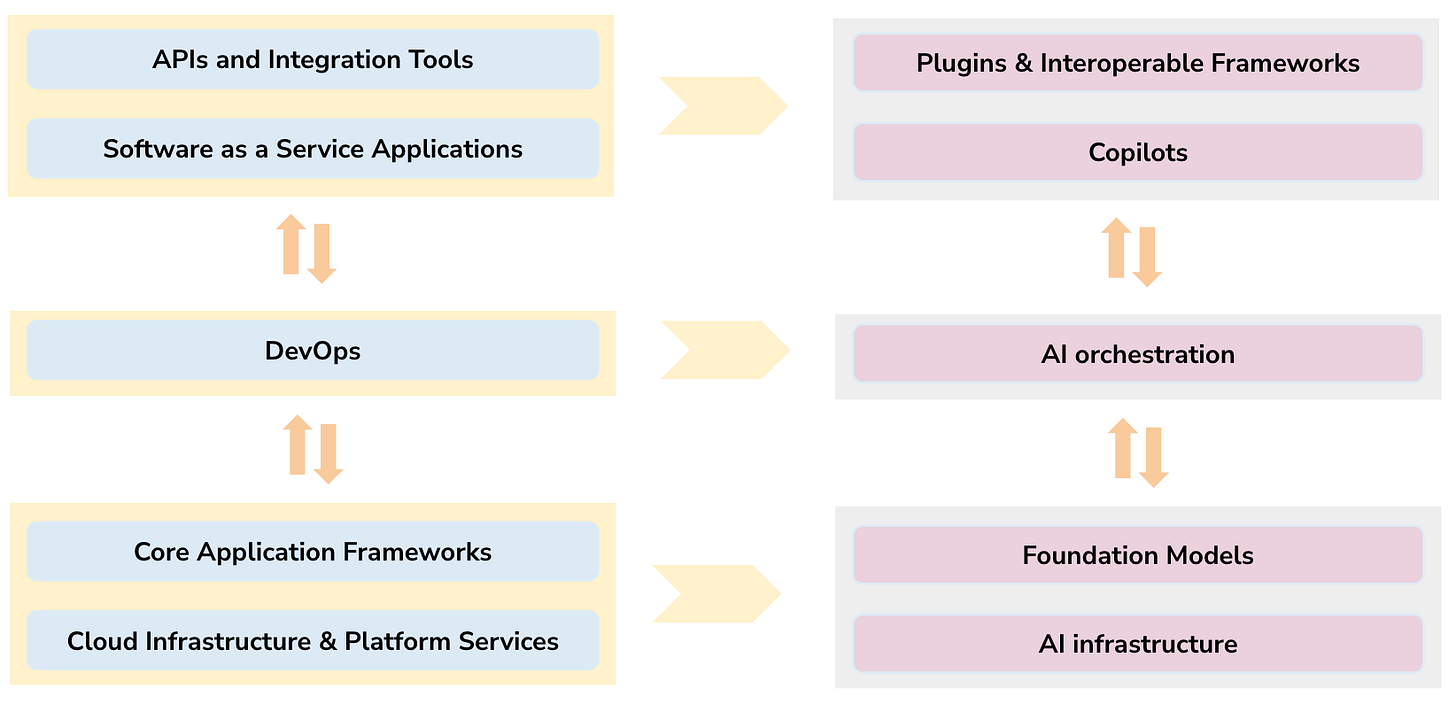

Andrej Karpathy recently discussed the idea of an LLM OS on X, which paints a more detailed picture than the Semantic Kernel architecture built by Microsoft, an architecture that gives a model implementation “arms & legs” to perform user actions. The evolution from cloud infrastructure, app frameworks, DevOps, SaaS applications and APIs / integration tools has found its AI equivalent in an updated architecture drawn out by Microsoft, as showcased in the accompanying figure. This creates a pathway for a new set of co-pilots with updated capabilities that let them interface with existing and new apps and services to improve user productivity.

Action driven agents for enterprise knowledge workers

Let’s dive deeper into how action-driven agents add value to enterprise knowledge workers. Knowledge workers rely on software running on computing devices, most commonly desktop and mobile, and this is where the majority of manual work happens today, which makes it the biggest opportunity for value creation. The release of AutoGPT was a great first stepping stone for the community to envision how agents can break down complex tasks into simple steps and then interface with apps & services hosted on the internet to complete those tasks autonomously. While this is a great start, research into various core functionalities has since produced better ingredients that can power the new generation of workflow agents.

Before we dive in, let’s understand the user journey for a workflow agent in its capability to observe & identify an existing process, build the agent flow / logic and finally deploy it, while also fine tuning it based on user interaction.

Taking a real life knowledge worker example…

To put the above in context, let’s take an example of an actual process that occurs within a sell-side or asset management vertical at a large bulge-bracket bank or a hedge fund that engages in foreign exchange trades. A foreign exchange trader sells 100,000 USD in exchange for 140,000 CAD, at a rate of 1.4 CAD / USD, and sends an email to a trade settlements desk analyst to get compensated for the trade and to ensure that the trade is settled. This is a lengthy and arduous process that has the following steps:

Let’s use this example to understand the AI agent capability needed to fully accomplish this task and the technologies available to power this.

Observing via Multi-Modality & Comprehension

Taking the example above in context, an autonomous workflow agent powered by a multi-modal model should be able to:

Watch Email Queues and identify emails in the queue that reference a ForEx trader executing a specific trade.

Read Unstructured Emails to identify the currency sold, bought, executed rate & transaction ID.

Identify the various apps and services that the settlement desk interfaces with, such as email, the Bloomberg terminal, the internal terminal / mainframe, the sales credit payout system and an externally available trade settlement system such as Broadridge.

Reason about the observed steps to identify the underlying formula for sales credit calculation.
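To make the "read unstructured emails" step concrete, here is a minimal sketch in Python. It is only an illustration: a regular expression stands in for the multi-modal model, and the email wording, the `FxTrade` fields and the transaction ID format are all assumptions made up for this example. The consistency check reuses the numbers from the trade above (100,000 USD at 1.4 CAD / USD should give 140,000 CAD).

```python
import re
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class FxTrade:
    sold_ccy: str
    sold_amount: Decimal
    bought_ccy: str
    bought_amount: Decimal
    rate: Decimal  # quoted as bought currency per unit of sold currency
    transaction_id: str


# Hypothetical email wording. Real emails vary far more, which is exactly
# why a language model, not a regex, would handle this step in practice.
TRADE_PATTERN = re.compile(
    r"sold\s+(?P<sold_amt>[\d,]+(?:\.\d+)?)\s+(?P<sold_ccy>[A-Z]{3})"
    r".*?for\s+(?P<bought_amt>[\d,]+(?:\.\d+)?)\s+(?P<bought_ccy>[A-Z]{3})"
    r".*?rate\s+of\s+(?P<rate>\d+(?:\.\d+)?)"
    r".*?transaction\s+id[:\s]+(?P<txn>[A-Z0-9-]+)",
    re.IGNORECASE | re.DOTALL,
)


def _to_decimal(text: str) -> Decimal:
    return Decimal(text.replace(",", ""))


def parse_trade_email(body: str) -> Optional[FxTrade]:
    """Return the trade described in the email, or None if nothing matches."""
    match = TRADE_PATTERN.search(body)
    if match is None:
        return None
    return FxTrade(
        sold_ccy=match["sold_ccy"].upper(),
        sold_amount=_to_decimal(match["sold_amt"]),
        bought_ccy=match["bought_ccy"].upper(),
        bought_amount=_to_decimal(match["bought_amt"]),
        rate=Decimal(match["rate"]),
        transaction_id=match["txn"],
    )


def is_consistent(trade: FxTrade, tolerance: Decimal = Decimal("0.01")) -> bool:
    """Check that sold amount times rate matches the bought amount."""
    return abs(trade.sold_amount * trade.rate - trade.bought_amount) <= tolerance


EMAIL = (
    "Hi team, I sold 100,000 USD for 140,000 CAD at a rate of 1.4. "
    "Transaction ID: FX-0001. Please settle."
)
trade = parse_trade_email(EMAIL)
```

An email that fails `is_consistent` is a natural candidate for the agent to flag to the analyst rather than process automatically.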

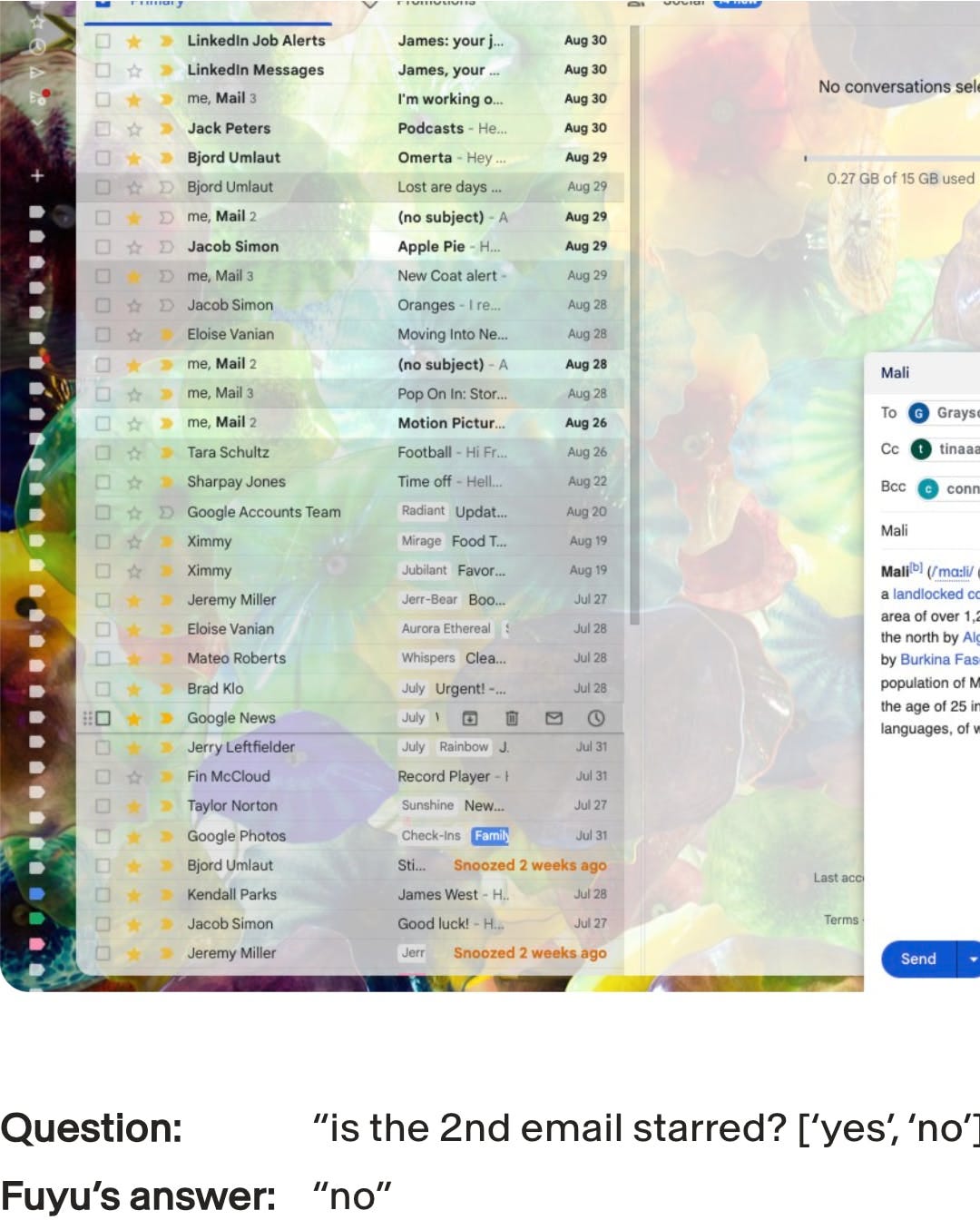

Adept AI recently released a multi-modal model for knowledge workers called Fuyu-8B, which has a simpler architecture than other image understanding models and is able to answer UI-based questions about the user's screen. Consider an example from the release notes, in which a workflow may involve asking whether the second email has a star against it or not. The steps, applications and logic above can be reasoned out by multi-modal models that closely watch the user's screen, sometimes over several iterations, to chalk out simpler sub-tasks and identify applications and user actions.

Building & Iterating on the Workflow

Once the agent has observed the user actions across several attempts, the agent ideally would perform the following actions:

Summarize the key steps / sub-tasks in the process, applications interfaced, data exchanged from defined input & output sources, key triggers etc.

Identify and present the initial skeleton with built in triggers, actions, logical constructs and branches with different outcomes while capturing all the edge cases & exceptions.

Test run various scenarios in a guided way with controlled user inputs to test robustness and functionality.

Present the user with specific areas where the agent requires more context to clearly articulate the process steps, branches & exceptions.

Identify SLAs and runtime constraints, and deploy multiple instances at specific speeds as needed to meet actual volumes.

Allow configuration of channels for the user to interface with the agent, such as a guided forms-based app / widget interface, web form, chat or voice-based interfaces.

The capability to structure and present this information in a guided & easy-to-understand manner is key to building a robust and scalable agent alongside the user through automated workflow discovery & development.
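One way to picture the "initial skeleton" of triggers, actions and branches is as a small data structure. The sketch below is a minimal illustration in Python, not a reference design: the `Step` and `Workflow` classes, the step names and the 0.01 tolerance are all assumptions invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Context = Dict[str, float]


@dataclass
class Step:
    name: str
    action: Callable[[Context], None]  # mutates the shared context
    # Picks the name of the next step; None (or no branch) ends the run.
    branch: Optional[Callable[[Context], Optional[str]]] = None


@dataclass
class Workflow:
    trigger: str  # human-readable description of what starts a run
    start: str
    steps: Dict[str, Step] = field(default_factory=dict)

    def add(self, step: Step) -> "Workflow":
        self.steps[step.name] = step
        return self

    def run(self, ctx: Context, max_steps: int = 20) -> List[str]:
        """Execute steps from `start` and return the names visited, in order."""
        visited: List[str] = []
        current: Optional[str] = self.start
        while current is not None and len(visited) < max_steps:
            step = self.steps[current]
            step.action(ctx)
            visited.append(current)
            current = step.branch(ctx) if step.branch else None
        return visited


def validate(ctx: Context) -> None:
    # Difference between the expected and the reported bought amount.
    ctx["mismatch"] = abs(ctx["sold"] * ctx["rate"] - ctx["bought"])


def noop(ctx: Context) -> None:
    pass  # placeholder for a real action such as booking the settlement


workflow = (
    Workflow(trigger="new trade email in settlements queue", start="validate")
    .add(Step("validate", validate,
              lambda ctx: "settle" if ctx["mismatch"] < 0.01 else "ask_user"))
    .add(Step("settle", noop))
    .add(Step("ask_user", noop))
)

happy_path = workflow.run({"sold": 100_000.0, "rate": 1.4, "bought": 140_000.0})
exception_path = workflow.run({"sold": 100_000.0, "rate": 1.4, "bought": 139_000.0})
```

Keeping the branch logic as data makes it straightforward to show the skeleton back to the user and to replay test scenarios against it with controlled inputs.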

Interfacing w/ Various Apps to Perform Actions

Putting the example into context, modern workflow agents powered by Large Language Models have the capability to interface with applications and services of various types in the following ways:

Interaction with applications via REST APIs can be achieved for applications such as Bloomberg and Broadridge that are built on a micro-services architecture and expose APIs, which agents can harness via built-in plug-ins or auto-generated code.

Interaction with legacy applications may require emulating / mimicking human actions due to a lack of integration capabilities. Such applications are more common in traditional industries, which are slow to migrate because of tech debt and the scale at which they operate, and this prevents them from moving to modern application stacks for long periods of time.

Interaction with AI-native third-party autonomous systems may involve exchanging data on a lower-level semantic layer to achieve lower latency or greater efficiency. In this case, specific trading systems may be fully autonomous in nature, built on AI-native algorithms. This is a growing area of research as model architectures evolve.
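The first two interaction styles above can sit behind a common interface so that the rest of the agent does not care how an application is reached. The following is a minimal sketch under stated assumptions: the class names, the registry keys and the `example.invalid` URL are hypothetical, and neither connector talks to a real system. Each one simply returns a description of what it would do. The third style (AI-native systems) is left out because its interface is still an open research question.

```python
from typing import Dict, Protocol


class Connector(Protocol):
    def submit(self, payload: Dict[str, str]) -> str:
        """Send the payload to the target application and describe the result."""
        ...


class RestConnector:
    """Stand-in for an application that exposes an API. No HTTP call is made."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url

    def submit(self, payload: Dict[str, str]) -> str:
        fields = ",".join(sorted(payload))
        return f"POST {self.base_url}/settlements fields={fields}"


class UiEmulationConnector:
    """Stand-in for a legacy app driven by mimicking keyboard input."""

    def __init__(self, window_title: str) -> None:
        self.window_title = window_title

    def submit(self, payload: Dict[str, str]) -> str:
        keystrokes = "; ".join(f"type {k}={v}" for k, v in sorted(payload.items()))
        return f"UI[{self.window_title}] {keystrokes}"


# Hypothetical registry mapping each application to the way it is reached.
APP_REGISTRY: Dict[str, Connector] = {
    "settlement_system": RestConnector("https://settlement.example.invalid/api"),
    "mainframe": UiEmulationConnector("Internal Terminal"),
}


def dispatch(app: str, payload: Dict[str, str]) -> str:
    """Route a payload to whichever connector is registered for the app."""
    return APP_REGISTRY[app].submit(payload)


api_result = dispatch("settlement_system", {"txn": "FX-0001", "ccy": "CAD"})
ui_result = dispatch("mainframe", {"txn": "FX-0001"})
```

With this shape, migrating a legacy application to an API later only means swapping its registry entry, while the workflow logic stays untouched.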

Here is a fun post on X with an example of how Adept uses Fuyu-8B to interface natively in a browser with various applications that have no API capability.

Role of Human-In-The-Loop driven Fine Tuning

The key problems / hindrances for agents to work consistently over long periods of time with a variety of complex apps can be largely bucketed into:

Reconfiguring actions to adjust to frequent changes in user interfaces, including changing button positions and UI styles, for applications with no API capability. This otherwise requires a ton of refactoring, adding more time to develop & maintain agents.

Seamlessly flagging errors and absorbing user input during execution to accommodate any unaccounted edge cases and error scenarios, and building robust rollback and handling mechanisms over time. This is what creates a true human-in-the-loop / continually learning agent as the workflow evolves.

Supervised Fine-Tuning and reward modelling have a great role to play, but they can be expensive and time-consuming when feedback loops are short. This is another area of growing research, and methods such as Direct Preference Optimization may be stepping stones towards efficient HITL workflow agents that need less human touch over long periods of usage.
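To give a feel for why Direct Preference Optimization (DPO) is attractive here: it trains directly on preference pairs (for instance, an agent action the user accepted versus one they corrected) without fitting a separate reward model. The sketch below computes the DPO loss for a single pair in plain Python. The log-probability values are made-up numbers for illustration, and a real training setup would compute them with a model and batch them in a deep learning framework.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one preference pair: -log(sigmoid(beta * margin)).

    The margin is how much more the policy prefers the chosen response
    over the rejected one, relative to a frozen reference model.
    """
    chosen_shift = policy_chosen_logp - ref_chosen_logp
    rejected_shift = policy_rejected_logp - ref_rejected_logp
    x = beta * (chosen_shift - rejected_shift)
    # -log(sigmoid(x)) equals softplus(-x); branch for numerical stability.
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


# Policy identical to the reference: no preference learned yet.
neutral = dpo_loss(-1.0, -1.0, -1.0, -1.0)

# Policy has moved towards the action the user accepted: lower loss.
improved = dpo_loss(-1.0, -3.0, -2.0, -2.0)
```

The loss falls as the policy shifts probability towards the accepted action, which is the behaviour a HITL agent wants from each user correction.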

Agent Deployment / Orchestration

Putting the use case / example into context, the following considerations need to be kept in mind:

Depending on the sensitivity of the information they handle, such agents may often need to run locally, either in an on-premise container or on the end-user machine. There have been various advancements in models that can run locally on a user machine at considerably high levels of accuracy, such as the multi-modal TinyGPT-V and Apple's Ferret for text & images and, most notably, the Phi-2 language model by Microsoft. The key advancement to watch would be the availability of chipsets that can power such expensive computations on user devices.

Maximization of the user experience involves splitting the end to end process into sub-tasks that can be done asynchronously in the background and tasks that require a user input for guidance. Putting the example into context, this can be explained using the following visual:
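In code terms, the same split might look like the minimal `asyncio` sketch below. It is an illustration under assumptions: the task names are invented, `asyncio.sleep(0)` stands in for real I/O, and `ask_user` is a hypothetical callback representing whichever channel (form, chat, voice) the user has configured.

```python
import asyncio
from typing import Awaitable, Callable, Dict, List


async def background_task(name: str) -> str:
    """A sub-task that needs no user input."""
    await asyncio.sleep(0)  # placeholder for real I/O such as an API call
    return f"{name}: done"


async def run_process(
    ask_user: Callable[[str], Awaitable[str]],
) -> Dict[str, object]:
    # Start the independent sub-tasks so they progress in the background.
    background = asyncio.gather(
        background_task("fetch trade email"),
        background_task("look up executed rate"),
    )
    # Only this step blocks on the user.
    answer = await ask_user("Approve settlement for this trade?")
    results: List[str] = await background
    return {"background": results, "approved": answer == "yes"}


async def auto_approve(prompt: str) -> str:
    # Stand-in for a real user interface.
    return "yes"


outcome = asyncio.run(run_process(auto_approve))
```

The user is interrupted once, for the approval, while everything that can proceed without guidance does so concurrently.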

Notable Open Source Projects

While researching workflow agents, I came across some great open source projects that are turning the above vision into action:

Open Interpreter

Open Interpreter, a project that facilitates running code on a local machine, recently released an update that enables a user to interface with a computer screen using vision models and general interaction APIs. This is indicative of the movement towards local execution, which is especially beneficial for high-volume enterprise workflow tasks done in secure environments.

App Agents

While modern-day workflow agents have typically been limited to desktop screens, a lot of enterprise applications are now native to mobile, with crucial tasks also being done on mobile. Agent frameworks such as AppAgent provide the capability to run such automations natively on mobile, thus extending capability beyond the desktop.

Wrap up

Thinking of this more philosophically, a case can be made that a Cambrian explosion in AI-native applications and services, and their adoption, could bring in a new paradigm of user interfaces beyond the “knobs-and-dials” interfaces of today. That would introduce new types of data exchange mechanisms in which underlying models exchange data & information differently, creating a new paradigm of APIs. This may render the capability of mimicking human actions on existing software types largely archaic and less valuable. However, adoption curves, especially for knowledge workers, are often very slow due to the complexity of organizational structures and their processes, which creates technical debt. Information & application silos are a constant occurrence for knowledge workers and enterprises. Thus, such agents shouldn’t be viewed as a band-aid, but rather as a stepping stone for organizations to transition to more modern and consistent application interfaces. The nature of human-machine interaction may evolve over time, and agents may end up mimicking evolved interaction types rather than present-day actions such as “clicking on buttons, dragging & dropping, scrolling etc.”. This maintains defensibility & relevance for this area of technology, which will evolve along with the new technology paradigm.