The path to profitability for Large Language Models

The cheaper, faster and better era for AI infrastructure in 2024 and beyond

TL;DR

From a “low-key research preview” to 180 million monthly active users as of January 2024, OpenAI’s ChatGPT marked the explosion of a sprawling AI community across open source and proprietary models. In the week before this article was written, 140 open source models were added to the Hugging Face Open LLM Leaderboard. Here are a few trends that explain the growth curve and its shift from accuracy and breadth to efficiency and depth:

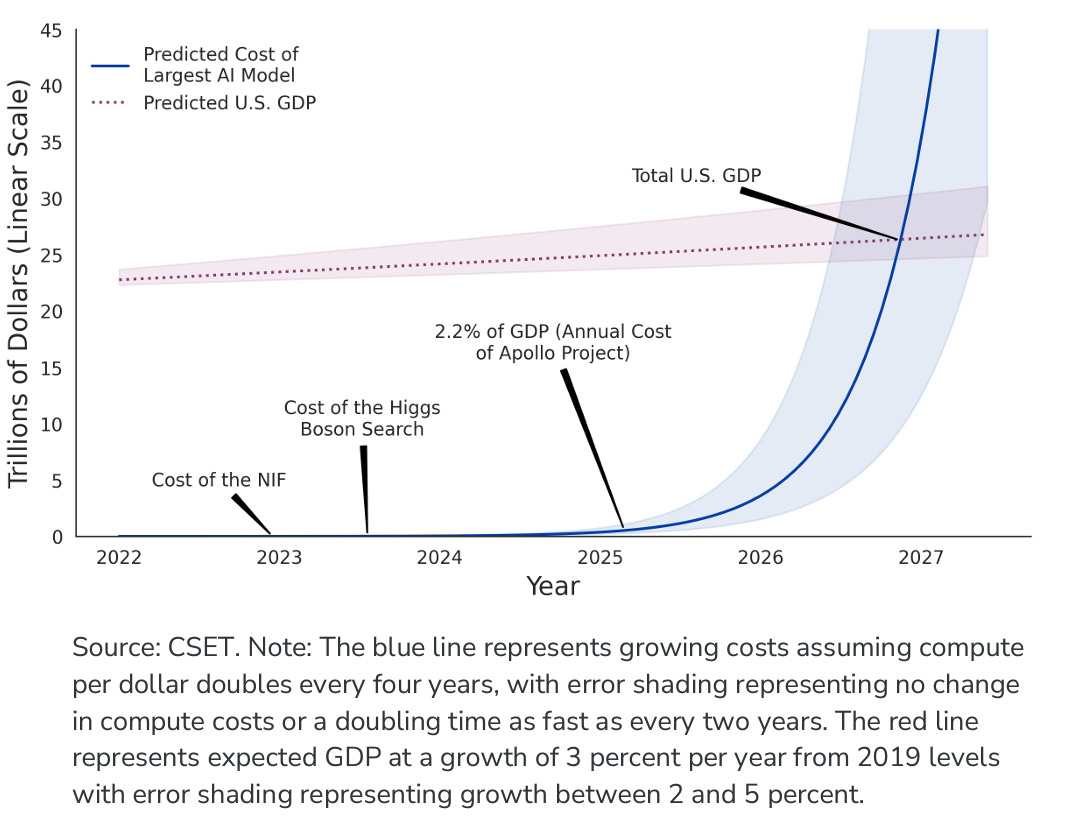

NVIDIA sold a whopping 550K H100s to big and small AI vendors alike, driving immense revenue growth.

At current computational loads, AI is forecast to consume 85-134 terawatt-hours (TWh) annually by 2027, comparable to the yearly electricity consumption of Argentina, Sweden or the Netherlands.

In 2023, investment in AI was an anomaly that resembled the growth-at-all-costs mindset of the ZIRP (Zero Interest Rate Policy) era, even as the higher cost of capital pushed early- and late-stage private companies toward business models that generate cash flow and profitability.

In late 2023, research shifted towards smaller models like Mistral 7B, focusing on training with high-quality, smaller datasets, reducing human supervision for fine-tuning, and developing model compression and low-cost deployment techniques.

Going from proof of concept to production, and then to a hyperscale business, requires sustainable unit economics that have to be built from the ground up, from model architecture and design all the way to efficient deployment.

Let’s dive deeper into how research in the last few months is paving the way with creative solutions that accelerate this trend. For anyone looking for a quick primer on the fundamentals of LLM training, I would highly recommend State of GPT by Andrej Karpathy or this article.

Dissecting existing unit economics of AI models

The human brain is an amazingly energy-efficient device. In computing terms, it can perform the equivalent of an exaflop (a billion billion, or 1 followed by 18 zeros, mathematical operations per second) with just 20 watts of power. Large language models, by contrast, still have a long way to go in computational and energy efficiency: they use a significant amount of GPU compute and energy both to be trained from scratch and to serve inference.

Capital Expenditure: Meta's acquisition of 350,000 NVIDIA GPUs for about $10 billion highlights the significant capital required for AI infrastructure. Meta reportedly spent $20 million training Llama 2, while OpenAI's daily expenses were about $700,000, or 36 cents per query, as of April 2023.
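These headline figures can be turned into rough unit costs with simple division. The sketch below is back-of-the-envelope arithmetic that uses only the numbers quoted above; the implied query volume is derived from them, not a separately reported figure, so it is only as accurate as the inputs.

```python
# Back-of-the-envelope unit economics from the figures quoted above.
# Inputs are the reported numbers; every output is a derived estimate only.

meta_gpu_spend_usd = 10e9          # ~$10B for Meta's GPU purchase
meta_gpu_count = 350_000           # NVIDIA GPUs
openai_daily_cost_usd = 700_000    # reported daily expenses (April 2023)
openai_cost_per_query_usd = 0.36   # reported cost per query

# Average price paid per GPU
cost_per_gpu = meta_gpu_spend_usd / meta_gpu_count

# Query volume implied by the daily cost and the per-query cost
implied_queries_per_day = openai_daily_cost_usd / openai_cost_per_query_usd

# Annualized serving cost at that daily run rate
annual_serving_cost = openai_daily_cost_usd * 365

print(f"Average cost per GPU:    ${cost_per_gpu:,.0f}")
print(f"Implied queries per day: {implied_queries_per_day:,.0f}")
print(f"Annualized serving cost: ${annual_serving_cost / 1e6:,.1f}M")
```

At roughly $28.6K per GPU and close to two million queries a day, even a fraction of a cent saved per query adds up quickly.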

Training Time: Meta’s 65B-parameter Llama 1 model took approximately 21 days to train on 1.4 trillion tokens using 2,048 NVIDIA A100 GPUs, a substantial amount of time given that base datasets keep expanding and evolving.
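A quick sanity check on these numbers: dividing tokens by GPU-seconds gives per-GPU throughput, and the widely used approximation of about 6 × parameters × tokens training FLOPs gives a rough hardware utilization. Both the 6ND rule and the A100 peak of 312 dense BF16 TFLOPS are assumptions of this sketch, not figures reported by Meta.

```python
SECONDS_PER_DAY = 86_400

params = 65e9             # assumed: the 65B-parameter Llama 1 model
tokens = 1.4e12           # training tokens
gpus = 2048               # NVIDIA A100 GPUs
days = 21                 # approximate wall-clock training time
a100_peak_flops = 312e12  # assumed A100 dense BF16 peak, FLOP/s

gpu_seconds = gpus * days * SECONDS_PER_DAY
gpu_hours = gpu_seconds / 3600

# Tokens processed per GPU per second
tokens_per_gpu_second = tokens / gpu_seconds

# Common approximation: ~6 FLOPs per parameter per training token
train_flops = 6 * params * tokens

# Achieved FLOP/s per GPU as a fraction of peak (model FLOPs utilization)
mfu = train_flops / gpu_seconds / a100_peak_flops

print(f"GPU-hours:           {gpu_hours:,.0f}")
print(f"Tokens/GPU/second:   {tokens_per_gpu_second:,.0f}")
print(f"Training compute:    {train_flops:.2e} FLOPs")
print(f"Approx. utilization: {mfu:.0%}")
```

Roughly a million A100 GPU-hours at under half of peak utilization shows why every retraining run on a growing dataset is a major expense.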

Gross Margin: Anthropic is estimated to have a gross margin of 50-55%, compared with the roughly 80% gross margins that SaaS and PaaS businesses typically boast. Much of the gap comes from the cost of the cloud compute needed to run AI models at scale.

Mega Venture Funding Rounds: MANG (Microsoft, Amazon, NVIDIA, Google) recently accounted for $25+ billion of venture capital investment, 8% of the capital raised in North America, of which a whopping $23+ billion went into Data and AI. A vast portion of this investment came in the form of cloud credits, earmarked for model training on the compute owned and run by the hyperscalers.

While OpenAI has been incredibly successful in creating a first-mover advantage for its information retrieval and infrastructure services, realizing sustainable software unit economics, with higher gross margins and operating leverage, will require efficiency gains from the model training phase all the way through to inference.

Learning from History: Data Centers in the 2000s

In the late 1990s and early 2000s, the tech boom spurred a sharp increase in demand for data storage, web hosting and internet services, escalating the need for data center space and infrastructure. This surge pushed up the cost of building and operating data centers, which required significant investments in construction, power, cooling systems and internet connectivity. At the time, less advanced cooling and energy technologies led to higher operational expenses. Real estate and energy costs also soared, while supply chain issues caused by increased demand for servers and networking equipment further inflated prices. Companies invested heavily in IT infrastructure, aiming for growth but not always with profitability in sight. The bursting of the dot-com bubble prompted a reevaluation of these investments, revealing an oversupply of data center space and network capacity that took years to absorb. The fallout included numerous internet company failures and a shift towards more efficient data center designs and operations, paving the way for the cost management improvements that ultimately produced behemoths with sustainable gross margins, such as AWS.

Business Implications for AI Infrastructure

Learning from history, building profitable businesses with healthy gross margins in the coming months and years will require combining efficiencies across the end-to-end (e2e) model training and deployment process with accurate demand mapping. The key pillars are:

Cheaper model training through efficient data collection, labelling and training methods can reduce the recurring CapEx of retraining models on GPU compute as public and private data expand exponentially. This directly improves Free Cash Flow (FCF), freeing up capital for more strategic investments in R&D.

Efficient fine tuning can reduce investment in labor-intensive fine-tuning and feedback techniques, containing the incremental OpEx needed to improve model accuracy and maintaining operational efficiency, while improving customer retention and growing the top line.

Efficient model design has the most direct impact on COGS (Cost of Goods Sold), and thus on gross margins, by reducing the infrastructure and operational costs of deploying and maintaining models.

In an AI economy where open source foundation models are abundant, pricing wars around providing the cheapest, fastest and best service will create margin compression which further accentuates the need for efficiency in all aspects.
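To see why efficiency matters so much under price pressure, here is a toy gross-margin model for an inference API. Every number in it (price per million tokens, GPU-hour cost, tokens served per GPU-hour) is a hypothetical assumption chosen for illustration, not data from any provider, and GPU time is treated as the only cost of goods sold.

```python
def gross_margin(price_per_m_tokens: float,
                 gpu_hour_cost: float,
                 tokens_per_gpu_hour: float) -> float:
    """Gross margin of serving tokens, with GPU time as the only COGS (a simplification)."""
    cogs_per_m_tokens = gpu_hour_cost * 1e6 / tokens_per_gpu_hour
    return (price_per_m_tokens - cogs_per_m_tokens) / price_per_m_tokens

# Hypothetical baseline: $2.00 per 1M tokens, $2.00 per GPU-hour, 2M tokens per GPU-hour
baseline = gross_margin(2.00, 2.00, 2e6)

# A price war halves the price while serving costs stay the same
after_price_cut = gross_margin(1.00, 2.00, 2e6)

# A more efficient model (e.g. smaller or sparser) doubles throughput per GPU-hour
with_efficiency = gross_margin(1.00, 2.00, 4e6)

print(f"Baseline:        {baseline:.0%}")
print(f"After price cut: {after_price_cut:.0%}")
print(f"With efficiency: {with_efficiency:.0%}")
```

In this toy setup, halving the price wipes out the margin entirely, and doubling tokens per GPU-hour restores it. That is the sense in which efficient model design feeds straight into COGS.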

How is the research community working towards building efficiencies?

Let’s dive deeper into the ongoing research in each of the phases to better understand how this may be achieved.

Cheaper and Faster Model Training

While the internet-scale corpus used to train large language models is extremely diverse, it can be unstructured, noisy and of poor quality, so training is often compute-intensive and long, or requires significant manual and computational effort to clean the dataset. Two active areas of research involve models creating their own training datasets and re-expressing existing datasets in higher-quality formats.

Recent research introduced a novel method called Self-Alignment with Instruction Backtranslation to improve how well language models follow instructions. The technique generates training examples from vast, unlabeled datasets, such as web documents, by predicting the instruction that each document could be a response to, and then selects the most effective examples for further training. Through this self-training, it significantly improves the model's ability to understand and execute instructions without extensive human-annotated data. The technique was tested on pretrained LLaMA models of different sizes (7B, 33B and 65B) and was shown to maintain similar levels of accuracy while consuming less computational power.
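The two steps of instruction backtranslation, self-augmentation (write an instruction for each unlabeled document) and self-curation (have the model grade the resulting pairs and keep only the best), can be sketched as a simple loop. The `generate_instruction` and `rate_pair` callables are hypothetical stand-ins for calls to a seed model, and the 1-5 rating with a keep-threshold illustrates the general idea rather than the paper's exact prompts.

```python
from typing import Callable

def backtranslate(
    documents: list[str],
    generate_instruction: Callable[[str], str],  # hypothetical: seed "backward" model
    rate_pair: Callable[[str, str], int],        # hypothetical: seed model as judge, 1-5
    min_score: int = 5,
) -> list[tuple[str, str]]:
    """Turn unlabeled documents into curated (instruction, response) training pairs."""
    # Self-augmentation: treat each document as a response and predict its instruction
    candidates = [(generate_instruction(doc), doc) for doc in documents]
    # Self-curation: keep only the pairs the model itself rates highly
    return [(inst, resp) for inst, resp in candidates if rate_pair(inst, resp) >= min_score]

# Toy stand-ins for the model calls
docs = [
    "Preheat the oven to 200C, then roast the vegetables for 30 minutes.",
    "click here subscribe now!!!",
]
toy_instructions = {docs[0]: "How do I roast vegetables?"}
pairs = backtranslate(
    docs,
    generate_instruction=lambda d: toy_instructions.get(d, "Summarize this text."),
    rate_pair=lambda inst, resp: 5 if resp in toy_instructions else 1,
)
print(pairs)
```

The curated pairs would then be used to fine-tune the model, and the improved model can run the next round of curation.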

Web Rephrase Augmented Pre-training (WRAP) was recently introduced as a method to make training large language models (LLMs) more efficient by using a mix of real and synthetic (rephrased) data. Think of WRAP as a smart filter that cleans and diversifies the data before it is fed to the model, much like refining raw ingredients for a gourmet meal. WRAP uses an off-the-shelf, instruction-tuned model to rephrase web documents into styles such as "Wikipedia" or "question-answer" format. This approach aims to create high-quality synthetic data that complements the real, noisy web data. Experiments show that WRAP can speed up pre-training by roughly 3x while improving model perplexity and zero-shot performance across a range of tasks.
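Conceptually, WRAP builds its pre-training stream by pairing real web text with rephrased copies produced by an instruction-tuned model. In this sketch, `rephrase` is a hypothetical stand-in for that model call, the style prompts are illustrative wording rather than the paper's exact prompts, and pairing each real document with exactly one rephrase (a 1:1 mix) is a simplifying assumption.

```python
import random
from typing import Callable

# Illustrative style prompts (assumed wording, not the paper's exact prompts)
STYLE_PROMPTS = {
    "wikipedia": "Rephrase the following text in a high-quality, Wikipedia-like style:",
    "qa": "Convert the following text into a question-and-answer format:",
}

def wrap_mix(
    web_docs: list[str],
    rephrase: Callable[[str, str], str],  # hypothetical: (prompt, doc) -> rephrased text
    seed: int = 0,
) -> list[str]:
    """Return a shuffled training stream with each real doc plus one rephrased copy."""
    rng = random.Random(seed)
    mixed = []
    for doc in web_docs:
        style = rng.choice(sorted(STYLE_PROMPTS))            # pick a style per document
        mixed.append(doc)                                    # keep the real, noisy text
        mixed.append(rephrase(STYLE_PROMPTS[style], doc))    # add its synthetic rephrase
    rng.shuffle(mixed)
    return mixed

stream = wrap_mix(
    ["the cat sat on teh mat lol", "BUY NOW best prices on GPUs"],
    rephrase=lambda prompt, doc: f"[{prompt.split()[0]}] {doc}",
)
print(stream)
```

Keeping the real documents alongside the rephrased ones is the point of the mix: the synthetic text adds clean, diverse styles, while the real text keeps the model exposed to the noise it will meet in practice.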

Efficient Fine Tuning

Advanced techniques like Supervised Fine-Tuning (SFT), RLHF (Reinforcement Learning from Human Feedback) and Reward Modelling enhance AI performance by incorporating human demonstrations and feedback, making model responses mimic human behavior more closely. In RLHF, humans compare multiple responses from the model, and their preferences shape a reward model that then guides further training. Techniques like LoRA and QLoRA offer efficient, minimal model adjustments, making it possible to fine-tune large models on limited hardware. Some more promising research in this area includes:
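LoRA's savings come from freezing a pretrained weight matrix W and training only two thin matrices A and B, so the layer computes x W + (alpha / r) x A B. The pure-Python sketch below counts trainable parameters for one hypothetical 4096 x 4096 projection at rank 8, then checks that with B initialized to zero (as in the LoRA paper) the adapted layer initially reproduces the frozen one. Sizes and values are illustrative assumptions.

```python
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lora_forward(x, W, A, B, alpha, r):
    """y = x W + (alpha / r) * x A B, with W frozen and only A, B trained.

    Shapes (row-vector convention): x: n x d_in, W: d_in x d_out,
    A: d_in x r, B: r x d_out.
    """
    base = matmul(x, W)
    update = matmul(matmul(x, A), B)
    scale = alpha / r
    return [[b + scale * u for b, u in zip(brow, urow)] for brow, urow in zip(base, update)]

# Parameter count for one hypothetical 4096 x 4096 projection at rank 8
d, r = 4096, 8
full_params = d * d          # trainable if fully fine-tuned
lora_params = d * r + r * d  # trainable with LoRA (A and B)
print(f"Full: {full_params:,}  LoRA: {lora_params:,}  ({lora_params / full_params:.2%})")

# Tiny numeric check: with B = 0 at initialization, LoRA output equals the frozen layer
rng = random.Random(0)
d_in, d_out, rank = 4, 3, 2
x = [[rng.uniform(-1, 1) for _ in range(d_in)]]
W = [[rng.uniform(-1, 1) for _ in range(d_out)] for _ in range(d_in)]
A = [[rng.gauss(0, 0.02) for _ in range(rank)] for _ in range(d_in)]
B = [[0.0] * d_out for _ in range(rank)]
assert lora_forward(x, W, A, B, alpha=16, r=rank) == matmul(x, W)
```

Training 0.39% of the layer's parameters is what makes fine-tuning large models on a single GPU plausible; QLoRA pushes this further by also storing the frozen W in 4-bit precision.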

Direct Preference Optimization (DPO) was recently introduced as a technique that fine-tunes language models directly on preference data, bypassing traditional, more complex reinforcement learning approaches. It removes the need to train a separate reward model and then optimize the policy against it with RL, by cleverly re-parameterizing the reward in terms of the language model itself, so that the model can be trained to optimize the reward directly. Results show that DPO can match or exceed the performance of existing methods at making models produce preferred outputs on sentiment control and summarization tasks, with less computational effort. Part of what makes this exciting is that there is now an active ecosystem of small “alignment” datasets, and many people are producing DPO instruction-tuned models relatively cheaply (compared to training from scratch) with different characteristics. Andrew Ng summarizes this very well in his tweet.
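At its core, DPO trains on preference pairs with a single logistic loss. For a prompt x with a preferred response y_w and a rejected response y_l, the loss for one pair is -log sigmoid(beta * [(log pi_theta(y_w|x) - log pi_ref(y_w|x)) - (log pi_theta(y_l|x) - log pi_ref(y_l|x))]), where pi_ref is the frozen starting model and beta controls how far the policy may drift from it. The sketch computes this from sequence log-probabilities, which in practice come from the model; the numbers here are made up.

```python
import math

def dpo_loss(policy_logp_chosen: float, policy_logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair, from summed sequence log-probabilities."""
    # Implicit rewards: how much more likely the policy makes each response vs. the reference
    chosen_reward = beta * (policy_logp_chosen - ref_logp_chosen)
    rejected_reward = beta * (policy_logp_rejected - ref_logp_rejected)
    margin = chosen_reward - rejected_reward
    # -log(sigmoid(margin)) written as log(1 + exp(-margin))
    return math.log1p(math.exp(-margin))

# Before training the policy equals the reference, so the margin is 0 and the loss is log 2
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# Raising the chosen response's likelihood relative to the reference lowers the loss
print(dpo_loss(-10.0, -15.0, -12.0, -15.0))
```

No reward model is ever trained or queried here: the "reward" is implicit in the log-probability ratios, which is what makes DPO cheap to run.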

Self-Play Fine-Tuning (SPIN) was recently introduced as a training method that enhances language models through self-play: the model generates its own responses and learns to distinguish them from human-written ones, in effect playing against its previous iteration. This self-generated data serves as new training material, helping the model learn more nuanced language patterns and improve over time without additional human-annotated data. The approach demonstrates notable improvements in language model performance across several benchmarks, showing that the method can enhance model capabilities cost-efficiently.
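In the SPIN paper, self-play has a specific meaning: at each iteration, the previous model answers prompts from an existing supervised fine-tuning dataset, and the new model is trained with a DPO-style logistic loss, log(1 + exp(-t)), to prefer the human-written answer over that self-generated one, with the previous iterate as the reference. The sketch shows one iteration's data construction and per-pair loss; `generate` and the log-probability values are hypothetical stand-ins.

```python
import math
from typing import Callable

def spin_pairs(sft_data: list[tuple[str, str]],
               generate: Callable[[str], str]) -> list[tuple[str, str, str]]:
    """Build (prompt, real_response, synthetic_response) triples for one SPIN iteration.

    `generate` stands in for sampling from the previous iteration's model (hypothetical).
    """
    return [(prompt, human, generate(prompt)) for prompt, human in sft_data]

def spin_loss(logp_real: float, logp_synth: float,
              prev_logp_real: float, prev_logp_synth: float,
              lam: float = 0.1) -> float:
    """Logistic loss log(1 + exp(-t)) pushing the new model toward the human response.

    Log-probabilities are under the model being trained and under the previous
    iterate, which plays the opponent / reference role.
    """
    t = lam * ((logp_real - prev_logp_real) - (logp_synth - prev_logp_synth))
    return math.log1p(math.exp(-t))

triples = spin_pairs([("What is 2 + 2?", "2 + 2 = 4.")], generate=lambda p: "It is 5.")
print(triples)
print(spin_loss(-3.0, -6.0, -4.0, -5.0))
```

Training stops improving once the model's own answers become indistinguishable from the human ones, which is why SPIN needs no new labels beyond the original SFT data.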

Weight Averaged Reward Models (WARM) is a method that averages the parameters (weights) of several different reward models. This technique is akin to taking the collective wisdom of multiple expert systems to guide the decision-making of large language models more effectively. The research primarily aims to improve the RLHF alignment step for LLMs. Because it merges multiple reward models created during training into a single set of weights, the approach is particularly lightweight and is claimed to cost no more than a single model at inference time.
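The core operation in WARM is plain weight averaging: several reward models fine-tuned from the same pretrained initialization (for example with different hyperparameters) are merged by averaging their parameters element-wise. The sketch represents each model's weights as a dictionary of flat float lists, a simplification of real framework state dicts; the toy values are made up.

```python
def weight_average(models: list[dict[str, list[float]]]) -> dict[str, list[float]]:
    """Uniformly average parameters across models that share the same architecture."""
    names = models[0].keys()
    # All models must share the same parameter names (shared architecture and initialization)
    assert all(m.keys() == names for m in models)
    return {
        name: [sum(vals) / len(models) for vals in zip(*(m[name] for m in models))]
        for name in names
    }

rm_a = {"head.weight": [0.2, 0.4], "head.bias": [0.0]}
rm_b = {"head.weight": [0.4, 0.0], "head.bias": [0.3]}
rm_c = {"head.weight": [0.0, 0.2], "head.bias": [0.3]}
warm_rm = weight_average([rm_a, rm_b, rm_c])
print(warm_rm)
```

Because the result is one set of weights, scoring a response costs the same as running a single reward model, unlike averaging the predictions of an ensemble.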

Efficient Model Design

The classic scaling laws of LLMs generally state that increasing model size, dataset size and computational power tends to improve model performance significantly, but with diminishing returns beyond a certain point. For a while the consensus was to scale all three in a coordinated fashion, following Chinchilla-optimal scaling, whereas more recently it has become clear that what matters for deployment efficiency (rather than training efficiency) is to go big on compute and data for a much smaller model. Hence we see GPT-3 with 175B parameters trained on 300B tokens, but Phi-2 with 2.7B parameters trained on 1.4T tokens. The race for the biggest model eventually seemed to converge around similar scores on various benchmarks.
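The contrast between GPT-3 and Phi-2 is easiest to see in tokens per parameter and in compute. The sketch uses three common approximations, which are assumptions rather than figures from either model's authors: training compute C of about 6 * N * D FLOPs, a Chinchilla-style optimum of about 20 training tokens per parameter, and about 2 * N FLOPs per generated token at inference.

```python
import math

def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute: ~6 FLOPs per parameter per token."""
    return 6 * params * tokens

def chinchilla_optimal_params(flops: float, tokens_per_param: float = 20.0) -> float:
    """Model size that spends `flops` at ~20 tokens/param (C = 6 * N * 20N => N = sqrt(C / 120))."""
    return math.sqrt(flops / (6 * tokens_per_param))

models = {"GPT-3": (175e9, 300e9), "Phi-2": (2.7e9, 1.4e12)}
for name, (n, d) in models.items():
    c = training_flops(n, d)
    print(f"{name}: {d / n:,.1f} tokens/param, {c:.2e} training FLOPs, "
          f"Chinchilla-optimal size ~{chinchilla_optimal_params(c) / 1e9:.0f}B params, "
          f"~{2 * n / 1e9:.1f} GFLOPs per generated token")
```

Under these approximations, Phi-2's training budget would be "Chinchilla-optimal" for a model of roughly 14B parameters, so it is deliberately undersized for training efficiency. The payoff is at inference, where each generated token costs about 65 times fewer FLOPs than GPT-3.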

The most famous advancement in building efficient models has been the release of Mixtral 8x7B, which showcases the computational efficiency of a sparse mixture of experts (MoE): an ensemble-like model that combines several smaller “expert” sub-networks, each responsible for handling different types of inputs. Because only a few experts run for each token, MoE allocates computational resources more efficiently. With 47B parameters, of which only about 13B are active per token, Mixtral is significantly smaller than Llama 2 70B yet comparable in performance.
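In Mixtral the mixture happens inside each transformer layer: a small router scores all 8 expert feed-forward blocks for every token, only the top 2 are run, and their outputs are combined using softmax weights computed over those two scores. The pure-Python sketch shows top-k routing for a single token, with toy "experts" that just scale their input standing in for real feed-forward networks.

```python
import math
from typing import Callable

def moe_forward(x: list[float],
                router_logits: list[float],
                experts: list[Callable[[list[float]], list[float]]],
                k: int = 2) -> list[float]:
    """Sparse mixture-of-experts output for one token: run only the top-k experts."""
    # Pick the k experts with the highest router scores
    top = sorted(range(len(experts)), key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected experts' logits only
    m = max(router_logits[i] for i in top)
    exps = {i: math.exp(router_logits[i] - m) for i in top}
    total = sum(exps.values())
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)  # only k of the experts are ever evaluated
        out = [o + (exps[i] / total) * yi for o, yi in zip(out, y)]
    return out

# Eight toy "experts": expert j simply scales its input by (j + 1)
experts = [lambda v, s=j + 1: [s * vi for vi in v] for j in range(8)]
logits = [0.1, 2.0, -1.0, 2.0, 0.0, -0.5, 0.3, -2.0]
print(moe_forward([1.0, -1.0], logits, experts))  # -> [3.0, -3.0]
```

Experts 1 and 3 tie for the top score, so each gets weight 0.5 and the output averages their results; the other six experts never run, which is where the compute savings come from.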

In 2021, Jeff Dean (Chief Scientist at Google) described the concept of Pathways, observing that activating an entire dense neural network to accomplish a simple task is unlike how our brains work, where different parts of the brain specialize in different tasks. He proposed that “sparsely activated” models can dynamically learn which parts of the network are most effective for a given task, which not only gives them the capability to learn a variety of tasks but also makes them faster and more energy efficient. A recent paper on improving transformers with irrelevant data from other modalities took an interesting approach that seems to mimic interdisciplinary thinking, leveraging unrelated datasets for model training.

Matryoshka Representation Learning is a notable method that proposes a nested structure of data representations, where each nested prefix of an embedding can serve a different computational or accuracy requirement. This technique helps machine learning models adjust their complexity based on what's needed for a task. It's like having a tool that can switch between being a hammer and a screwdriver based on the job, helping balance accuracy and computational needs efficiently.
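In practice a Matryoshka embedding is used by truncating it: because training applies the loss to several nested prefixes (for example the first 8, 16, and so on up to the full dimension), the first m coordinates form a usable, cheaper embedding on their own. The sketch shows only the inference side, truncating and re-normalizing before a cosine-similarity ranking; the vectors are made up, and a real model would need to be trained with the Matryoshka objective for the prefixes to be meaningful.

```python
import math

def truncate(embedding: list[float], m: int) -> list[float]:
    """Keep the first m dimensions of a Matryoshka embedding and L2-normalize them."""
    prefix = embedding[:m]
    norm = math.sqrt(sum(v * v for v in prefix)) or 1.0
    return [v / norm for v in prefix]

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two already-normalized vectors."""
    return sum(x * y for x, y in zip(a, b))

def rank(query: list[float], docs: dict[str, list[float]], m: int) -> list[str]:
    """Rank documents by similarity using only the first m embedding dimensions."""
    q = truncate(query, m)
    return sorted(docs, key=lambda name: cosine(q, truncate(docs[name], m)), reverse=True)

query = [0.9, 0.1, 0.3, -0.2, 0.05, 0.0, 0.1, -0.1]
docs = {
    "doc_a": [0.8, 0.2, 0.25, -0.1, 0.0, 0.1, 0.05, 0.0],
    "doc_b": [-0.7, 0.5, 0.1, 0.3, 0.2, -0.1, 0.0, 0.1],
}

# Cheap coarse ranking with 4 dimensions, full comparison with all 8
for m in (4, 8):
    print(m, rank(query, docs, m))
```

A common pattern is to shortlist candidates with the short prefix and re-rank only the shortlist with the full vector, trading a little accuracy for much less compute and memory.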

SliceGPT is an approach that can be imagined as a highly detailed and expansive library (representing a large language model) where you're trying to make space and improve efficiency without losing much information. The approach involves "slicing": selectively removing rows and columns of the model's weight matrices (akin to shelves and books in our library analogy). It first applies a transformation that leaves the model's outputs unchanged, so that the least important parts can then be removed while the core functionality and outputs of the model remain largely intact. The method removes up to 25% of model parameters (including embeddings) with minimal impact on performance.
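The idea that lets SliceGPT delete whole rows and columns is that hidden states can be rotated by an orthogonal matrix without changing the output, and if that matrix comes from a PCA of the activations, the least important directions can be dropped. The NumPy sketch below illustrates only this core projection on one linear layer with synthetic calibration data; it is a simplification, not the paper's full procedure, which applies the idea across every transformer block and folds the rotation into adjacent weights.

```python
import numpy as np

def pca_slice(X: np.ndarray, W: np.ndarray, keep: int):
    """Project a linear layer onto the top `keep` principal directions of its inputs.

    X: (n_samples, d) calibration activations feeding the layer
    W: (d, d_out) weight matrix, so the layer computes X @ W
    Returns Q (d, keep) and the sliced weight Q.T @ W of shape (keep, d_out).
    """
    cov = X.T @ X / len(X)
    eigvals, eigvecs = np.linalg.eigh(cov)              # symmetric eigendecomposition
    Q = eigvecs[:, np.argsort(eigvals)[::-1][:keep]]    # top-`keep` directions
    return Q, Q.T @ W

rng = np.random.default_rng(0)
d, d_out, n = 8, 4, 256
# Synthetic activations that mostly live in a 6-dimensional subspace, plus small noise
X = rng.normal(size=(n, 6)) @ rng.normal(size=(6, d)) + 0.01 * rng.normal(size=(n, d))
W = rng.normal(size=(d, d_out))

Q, W_small = pca_slice(X, W, keep=6)  # 25% fewer rows in W
rel_err = np.linalg.norm(X @ W - (X @ Q) @ W_small) / np.linalg.norm(X @ W)
print(W.shape, "->", W_small.shape, f"relative error {rel_err:.4f}")
```

When activations really occupy a lower-dimensional subspace, the sliced layer reproduces the original almost exactly; in a real model the dropped directions carry some signal, which is where the small accuracy loss comes from.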

On-Device Multi-Modal Models

The final chasm currently being crossed is the ability to run model inference locally, on existing mobile phones and laptops and on potential AI-native devices of the future. While desktops and powerful laptops can already run inference for Small Language Models (SLMs) such as Mistral 7B and Phi-2 via Ollama, recent advancements in Core ML on iOS and MediaPipe on Android lay strong foundations for running many models on device. We’re already seeing work built on top of Phi-2, such as TinyGPT-V (2.8B parameters), and more. Here are some examples:
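For readers who want to try local inference on a laptop, Ollama serves pulled models over a local HTTP API, by default on port 11434. The sketch builds a non-streaming request to its `/api/generate` endpoint using only the Python standard library. It assumes Ollama is installed and running and that a model has been pulled under the name `phi` (the Ollama library name used for Phi-2 at the time of writing; check `ollama list` for the names you actually have).

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint

def build_request(prompt: str, model: str = "phi") -> urllib.request.Request:
    """Build a non-streaming generation request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt: str, model: str = "phi") -> str:
    """Send the request and return the generated text (requires Ollama to be running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]

# Example (needs a running Ollama server with the model pulled):
# print(generate("Explain mixture of experts in one sentence."))
```

Everything stays on the machine: no API key, no per-token bill, only local memory, compute and battery.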

TinyLlama, with its 1.1 billion parameters, is an accessible and affordable Small Language Model: it is cheaper to develop and pre-train, can typically be fine-tuned on a single GPU, and is more energy efficient, which is crucial for preserving battery power on a smartphone or other edge device. While it’s far from outperforming major LLMs, it’s intended to deliver strong results when fine-tuned for specific tasks.
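A quick way to see why parameter count decides what fits on a phone is to estimate the memory needed just to hold the weights: parameters times bits per weight, divided by 8. The sketch ignores activations, the KV cache and runtime overhead, so real requirements are higher; Mistral 7B is entered at its nominal 7B size.

```python
def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

models = {"TinyLlama": 1.1e9, "Phi-2": 2.7e9, "Mistral 7B": 7e9}  # nominal sizes
for name, params in models.items():
    sizes = ", ".join(f"{bits}-bit: {weight_memory_gb(params, bits):.2f} GB" for bits in (16, 8, 4))
    print(f"{name:<11} {sizes}")
```

At 4-bit precision TinyLlama's weights need only about half a gigabyte, small enough to coexist with everything else a phone keeps in memory, whereas a 7B model at 16-bit precision needs around 14 GB.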

Google recently released MobileDiffusion, a text-to-image model with a mere 520M parameters that runs on both iOS and Android and generates 512x512 images in less than a second, leveraging key techniques such as latent diffusion. It seems to be a great step forward for on-device generation.

After releasing Ferret late last year, Apple appears to be preparing updates to Siri and Messages in its iOS 17.4 release through a new Siri summarization framework. Apple seems to be using multiple versions of its AjaxGPT models, including one that runs on device and one processed in the cloud, and is benchmarking the models' results against GPT-3.5 and FLAN-T5.

While the above research is promising, running models locally on laptops and mobile phones without consuming significant memory, compute and battery power has yet to be demonstrated at a large scale. Based on Apple’s and Google’s announcements, however, there’s optimism that this can be brought to production scale, at least for specific tasks and features. Moreover, small and medium-sized models may be weaker at general knowledge and as dialogue partners.
