Evolution from Single to Multi-Threaded Agent Workflows

Dissecting the incremental value unlock from "Assistants" to "Agents"

TL;DR

From Assistants to Agents: Initially designed as basic tools for answering questions, AI chat assistants have advanced into autonomous agents capable of solving complex, multi-faceted problems.

Limitations of Single-threaded Interfaces: Traditional chat interfaces, while effective for linear tasks, struggle with complex queries that require broader contextual understanding and multi-step reasoning.

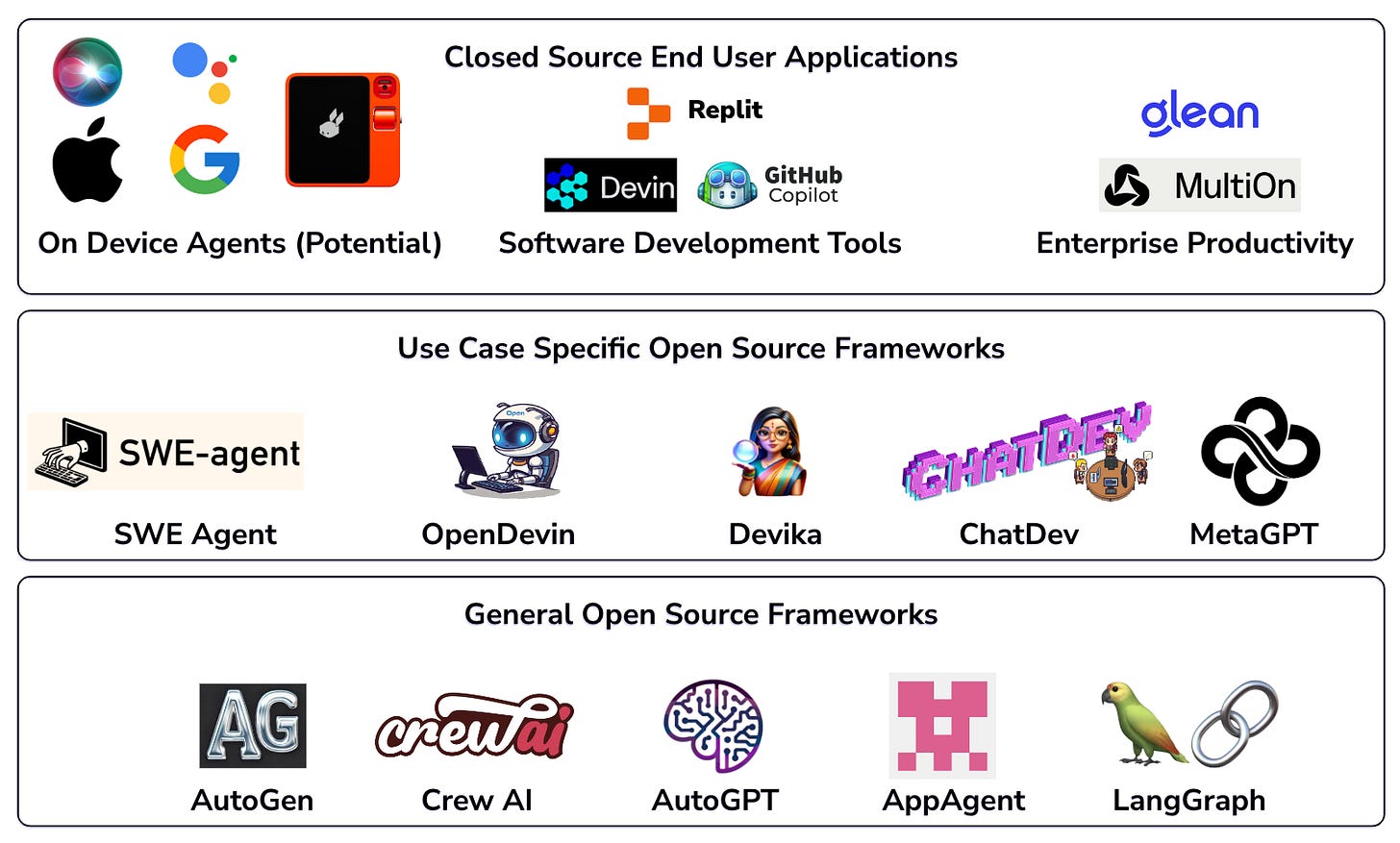

Open-Source Frameworks: Open-source frameworks have been instrumental in enhancing the capabilities of AI assistants, contributing to areas like software development, academic research, and creative content generation.

Evolving Software Ecosystem: The ecosystem is growing fastest at the infrastructure layer through frameworks such as ChatDev, MetaGPT and AutoGen, alongside early releases of verticalized tools such as Devin and MultiOn and the promise of on-device multi-threaded agents for consumer-grade devices.

Base Large Language Models to Helpful Chat Products

The 2017 release of the transformer architecture created an inflection point for the adoption of language models. The subsequent releases of GPT-3 in 2020 and GPT-3.5 in early 2022 led to the first helpful internet-scale answering engine, ChatGPT. Base models are not assistants; they simply complete documents by predicting the next token. The first product iteration was therefore a base model coaxed into serving as an assistant that answers questions using the corpus of internet knowledge it was trained on. Users could now ask deeply complex questions and get direct answers rather than traditional search results, which created a step change in productivity.

Limitations of Single Threaded Chat Assistants

Today we mostly interact with LLMs through single-threaded chat interfaces such as ChatGPT, Perplexity, Claude and Gemini, where every query is attached to a single chat session. While this model has yielded productivity gains so far, we have observed several limitations:

Planning based on Broader User Intent: A query usually carries a user intent tied to a longer and much more complex goal. Reaching that goal often requires planning an outline or identifying bodies of work, determining the tools needed for each task and whether web searches are required, writing initial drafts, and reflecting in order to iterate over multiple drafts.

Context Segregated Search Queries: Depending on complexity, users typically spin up multiple queries for individual tasks. These queries are disjointed from a context perspective, yet they could benefit from cross-collaboration and abstraction into a broader goal. As of today, chat products let users spin up multiple queries without any means to group or link them together.

Context Switching within the same query: Users switch context all the time within a single-threaded conversation, moving from one phase of a complex goal or task to the next in order to maintain continuity and ease of use. While Large Language Models offer context lengths on the order of 1 million tokens (Gemini 1.5), model accuracy reduces as the user adds more complex and varied types of context, compared with feeding the model a single topic.

Task Specialization by Model: While LLMs are typically adept at a wide variety of tasks across different verticals, models fine-tuned or grounded on specific data may perform better than general-purpose models.

Computational Inequality: A model spends the same amount of compute on predicting every token regardless of whether the task is complex or not, which makes it computationally very expensive. Only a portion of the model's layers and nodes is relevant to any specific task, which creates inefficiencies in inference time and cost.

Reflection: As Andrew Ng perfectly summarized, we use LLMs in zero-shot or few-shot prompting mode to generate final output tokens. It’s like asking someone to write an essay from start to finish without any pauses, backspaces or review. This often leads to hallucinations over a long range of predicted output tokens, reducing the overall reliability of responses.
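To make the reflection point concrete, here is a minimal sketch of a draft, critique and revise loop. It is an illustration under stated assumptions rather than any product's actual implementation: `LLM` stands for any prompt-in, text-out call, and `reflect_and_revise`, `scripted_llm` and the `APPROVED` convention are hypothetical names chosen for this example.

```python
from typing import Callable, Iterable

# Hypothetical stand-in for any text-generation call (API client, local model, ...).
LLM = Callable[[str], str]


def reflect_and_revise(task: str, llm: LLM, max_rounds: int = 3) -> str:
    """Draft once, then alternate critique and rewrite until approved or out of rounds."""
    draft = llm(f"Write a first draft for this task:\n{task}")
    for _ in range(max_rounds):
        critique = llm(
            f"Review the draft below for the task '{task}'. "
            f"Reply with only APPROVED if it needs no changes; "
            f"otherwise list the problems.\n\n{draft}"
        )
        # Assumed convention: the reviewer signals acceptance with the word APPROVED.
        if critique.strip().upper().startswith("APPROVED"):
            break
        draft = llm(
            f"Task: {task}\nDraft:\n{draft}\nReviewer feedback:\n{critique}\n"
            f"Rewrite the draft to address the feedback."
        )
    return draft


def scripted_llm(replies: Iterable[str]) -> LLM:
    """Test double that returns canned replies in order, ignoring the prompt."""
    it = iter(replies)
    return lambda prompt: next(it)


# One rejected draft, one rewrite, then approval.
demo = scripted_llm(["draft v1", "Missing a conclusion.", "draft v2", "APPROVED"])
print(reflect_and_revise("Summarize multi-agent frameworks", demo))  # prints "draft v2"
```

In a real system the drafting and reviewing calls could go to different models or differently prompted agents; the loop structure stays the same.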

Where do multi-agent frameworks add value?

From a use case standpoint, here are some key categories / users where such multi-agent frameworks may yield the highest benefit:

Software Development: This typically involves multiple activities, including planning, requirements gathering, architectural design, systems design, coding, testing, and bug detection and resolution, carried out by a variety of roles such as product managers, architects, program managers, software engineers and QA engineers. This makes it a great fit for a multi-agent framework that solves each task separately while collaborating on a broader end goal. GitHub Copilot Workspace is a great first example of a project-based, task-oriented and multi-agent assisted software development workflow.

Corporate & Academic Research: Research across industries involves searching for information based on multiple hypotheses, running multiple internet searches to analyze market trends, using tools to build scenarios, and validating external findings against internal knowledge bases. This is followed by strategy creation and refinement through an iterative process of documentation (tooling). The process is multi-faceted and involves multiple steps, tools and often delegated roles. OpenAI’s 600K sign-ups for ChatGPT Enterprise showcase, in part, the growing value of this specific use case.

Content Creation: Creators follow an iterative process that involves discovering the latest trends for novel content ideas, using existing audience analytics to understand demographics and preferences, and experimenting toward an end product via potential trailers. Since each step requires research, amalgamation of ideas and content creation via creative tools, multi-threaded agent workflows can help orchestrate the multi-modal content creation piece and augment it with the creator’s existing audience analytics and trends via tooling integrations. During its latest earnings call, Meta talked about dedicated AI content creation tools for its advertisers and for businesses using its suite of services, with specific AI tools for each aspect of their workflow.

Application Co-Pilots vs Unified Chat Interfaces

The most evident trade-off in designing multi-agent frameworks is whether companies building in this space should focus on co-pilots that are deeply integrated with their existing business apps, or on a completely new interface that integrates with an existing productivity suite and aggregates information for the user in one place. This can be viewed through the following lenses:

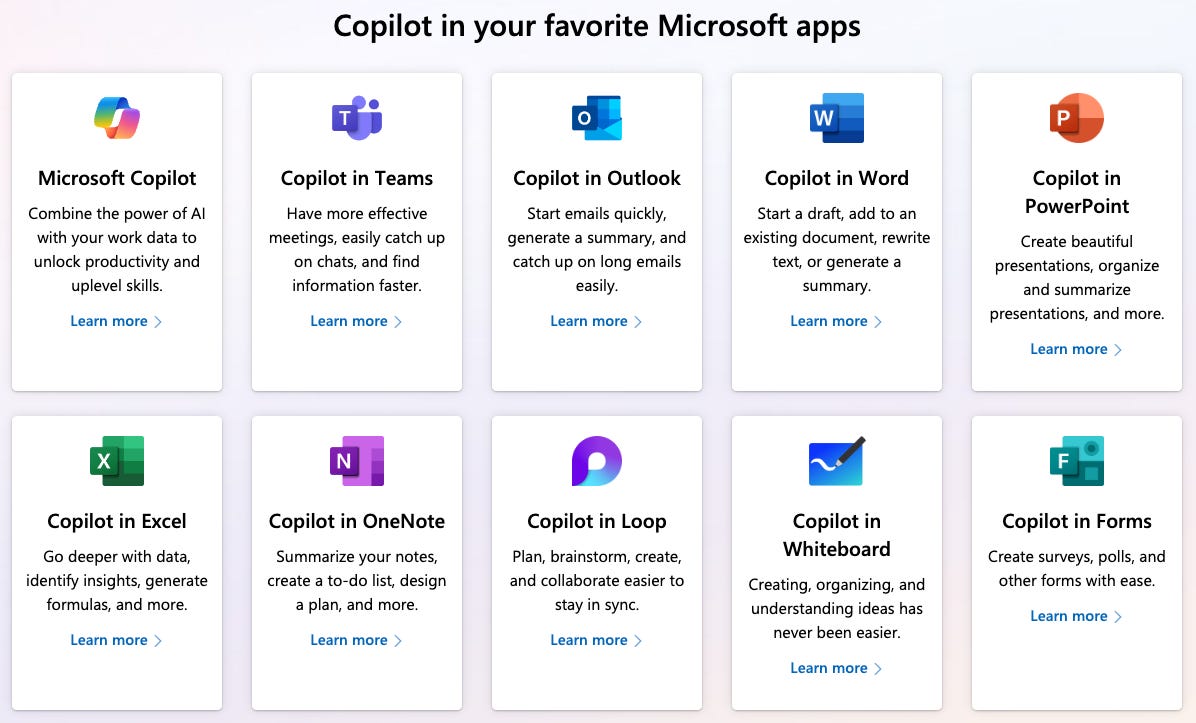

Accretive Innovation: This is currently seen among incumbents, where a separate co-pilot has been built for each underlying application. This design decision makes sense for large productivity suite companies such as Microsoft or Google, whose business model is based on the number of seats per tool sold to customers. These co-pilots may or may not collaborate, reflect on each other's responses or help with the broader user intent behind an overarching problem.

Disruptive Innovation: A unified interface may make more sense for newer companies such as OpenAI, Anthropic, Cohere and various other end-user AI application builders. Integrating across a wide variety of productivity tools while keeping users on one centralized dashboard helps justify subscription costs (the most common business model) and provides the best user experience while keeping it simple.

Product Architecture

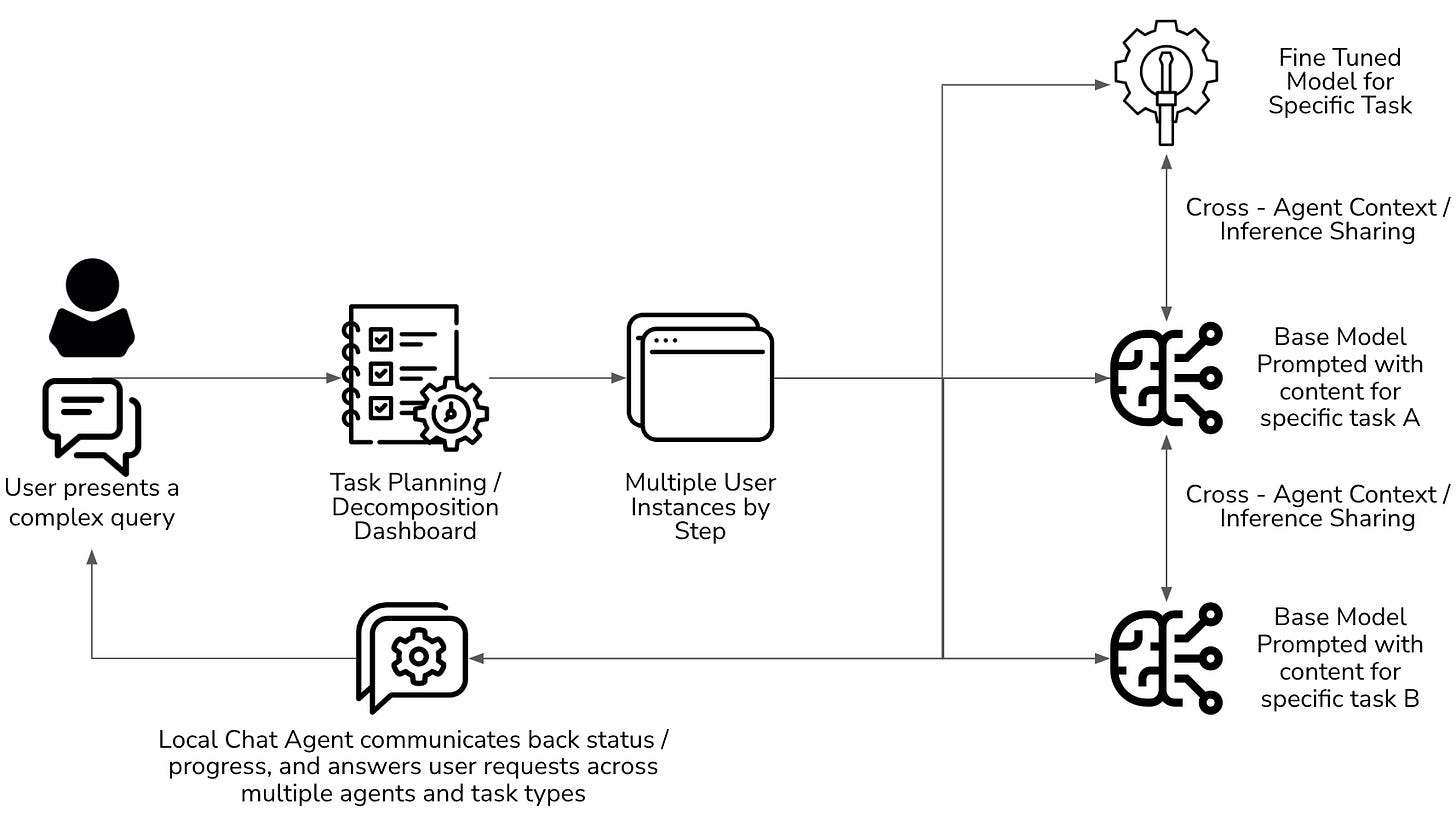

A typical multi-agent architecture can include the following nuances:

Multiple model architectures to solve for multiple modalities (diffusion driven image / video vs text based transformer models).

Multiple orchestration types, such as smaller models hosted locally on a user device for the conversational component while larger models are orchestrated in the cloud via API calls for more complex tasks.

The same model orchestrated with different contexts and prompts to generate different responses for the decomposed sub-tasks.

Models of different sizes or fine-tuned states, chosen based on the nature of the task, to leverage specialized knowledge and achieve higher accuracy.

The above nuances are representative, and designs can vary widely based on the use case, product constraints and accuracy requirements. The figure at the start of this section shows a high-level, representative architecture of what this multi-agent workflow may look like.
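As a rough illustration of how these pieces could fit together in code, the sketch below decomposes a goal into sub-tasks, keeps a separate context per sub-task thread, and routes each one to a small local model or a larger cloud model. Everything here is an assumption made for the example: `SubTask`, `Orchestrator`, the `simple` / `complex` labels and the stub models are hypothetical and do not come from any specific framework.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

ModelFn = Callable[[str], str]  # hypothetical: prompt in, text out


@dataclass
class SubTask:
    name: str
    prompt: str
    complexity: str                                    # assumed labels: "simple" or "complex"
    context: List[str] = field(default_factory=list)   # context private to this thread


@dataclass
class Orchestrator:
    models: Dict[str, ModelFn]   # model key -> callable
    routing: Dict[str, str]      # complexity label -> model key
    default_model: str           # fallback for unknown labels

    def route(self, task: SubTask) -> str:
        """Pick a model key from the task's complexity label."""
        return self.routing.get(task.complexity, self.default_model)

    def run(self, goal: str, tasks: List[SubTask]) -> Dict[str, str]:
        """Run each sub-task in its own thread of context under a shared goal."""
        results: Dict[str, str] = {}
        for task in tasks:
            # Each thread sees the shared goal and its own context, not the other threads'.
            prompt = "\n".join([f"Goal: {goal}", *task.context, task.prompt])
            results[task.name] = self.models[self.route(task)](prompt)
        return results


def make_stub(name: str) -> ModelFn:
    """Stub model that echoes the last prompt line, tagged with the model name."""
    return lambda prompt: f"[{name}] {prompt.splitlines()[-1]}"


orch = Orchestrator(
    models={"local-small": make_stub("local-small"), "cloud-large": make_stub("cloud-large")},
    routing={"simple": "local-small", "complex": "cloud-large"},
    default_model="cloud-large",
)
tasks = [
    SubTask("outline", "Draft an outline.", "simple"),
    SubTask("analysis", "Analyze market trends.", "complex", context=["Notes: focus on 2024."]),
]
results = orch.run("Write a market report", tasks)
for name, output in results.items():
    print(name, "->", output)
```

The routing table is deliberately a plain dictionary: swapping in a fine-tuned model for one task type, or the same model with a different system prompt, only changes the `models` and `routing` entries and leaves the orchestration loop untouched.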

Ecosystem

Multi-agent frameworks have an existing footprint, and further potential, across the following layers:

Infrastructure & Tooling: Several open-source tools have gained traction recently, such as AutoGen, Crew AI, ChatDev and MetaGPT, which provide tools for collaborative multi-agent workflows for either broad or specific sets of use cases.

End User Applications: This is the most interesting and eventually most valuable layer where:

Verticalized applications such as Devin, MultiOn and Glean leverage multi-agent workflows for consumer and enterprise use cases, solving complex tasks that range from coding and workflow automation to enterprise productivity.

Large novel consumer chat interfaces such as Cohere, Gemini, Claude, ChatGPT and Meta AI are largely single-threaded as of today and may achieve higher user stickiness by evolving beyond the existing single-threaded workflows to such multi-agent architectures. While they may be using multiple models in the background, surfacing that framework to solve complex end-user problems is yet to be seen. OpenAI’s custom GPT mentions seem to be a step in this direction, but the chats are still largely single-threaded.

On-Device Agents such as the one that Apple is rumored to release may involve leveraging multiple models under the hood, including OpenAI, Gemini and Apple's own OpenELM model, deeply integrated with Spotlight, Siri and Safari as the multi-agent assistant. Other device makers, such as Google with its Pixel line and makers of dedicated AI devices / pins, may follow similar product designs.

Looking Ahead

In the next section of this topic, we will focus on how these multi-agent frameworks can be built from a product and design lens, the technical and cost / efficiency trade-offs that are common while building them, and the tooling considerations that need to be kept in mind.

References & Further Reading

MetaGPT: Meta Programming for a Multi-Agent Collaborative Framework - ArXiv

AutoGen: Enabling Next Generation LLM Applications via a Multi-Agent Conversation